If you’re on social media, you’ve likely seen many examples of ChatGPT being used as a travel planning tool. To the naked eye, it looks great, but it’s a façade that sets users up for failure.

ChatGPT (and other AI tools) Can Plan Everything For You, Right?

There are a ton of social media examples suggesting that AI can do everything under the sun, but there are some obvious things it cannot do. To be clear, I run a business outside of the travel industry that uses ChatGPT every single day. We’ve developed our own tools, tried many that are already on the market, and have a pretty good grasp of what is and is not possible.

When I see videos like this one (and this is just one of many I have seen this week), it’s a little frustrating for some obvious reasons. It shows what we hope to get from GPT, but it’s incomplete at best. Here’s an example:

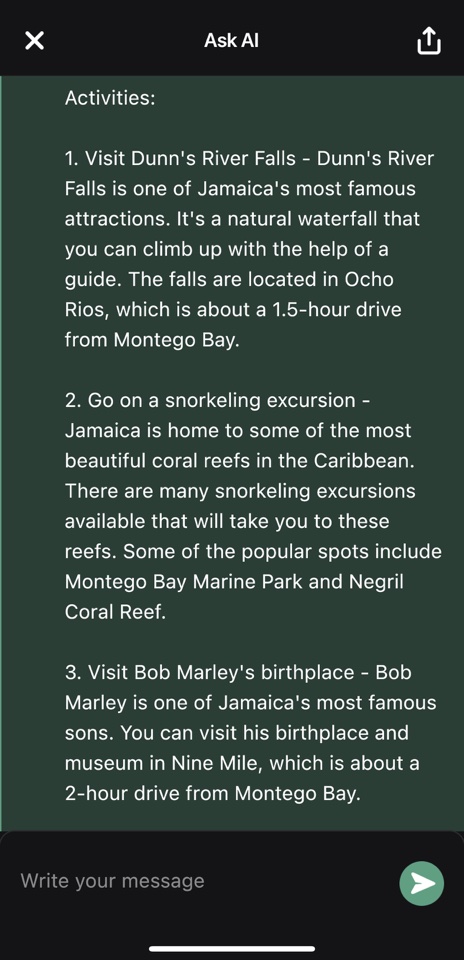

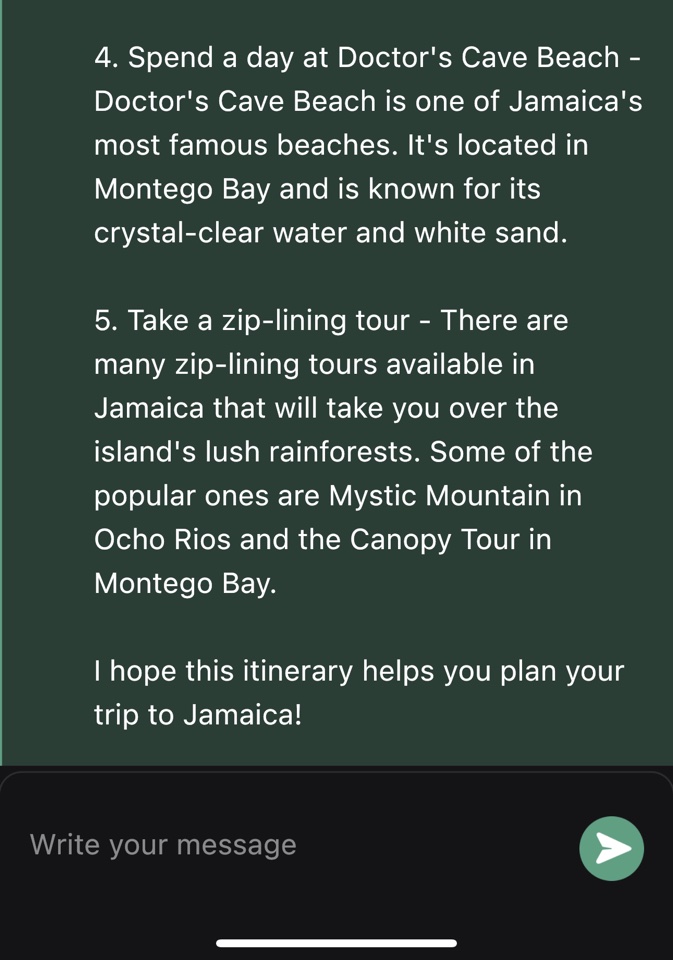

AskAI is an app that uses GPT and markets its ability to help with travel planning. It was advertised to me on social media (I have travel interests, obviously), so I downloaded it to see what it could do.

I tried the prompt: “Plan a trip for a family of four with activities to a resort in Jamaica for a week in May with costs and distance from the resort.”

Here’s what it returned:

You only get three questions through this app without paying, and the output can’t be exported without a paid membership.

Where It Succeeds

For general information, these tools are pretty good. Not perfect, no, but pretty good as they are today. All of the places in the above example are real, and the statements are generally true, even if slightly imperfect.

For example, using tools like AskAI or ChatGPT as the video suggested would help someone outline a rough itinerary of places that are approximately close to a hotel or point of interest.

The ability to output results as a spreadsheet, as the Instagram example demonstrated, is helpful, especially for analytical types, and adds to the utility of AI for trip planning.

Where It Fails

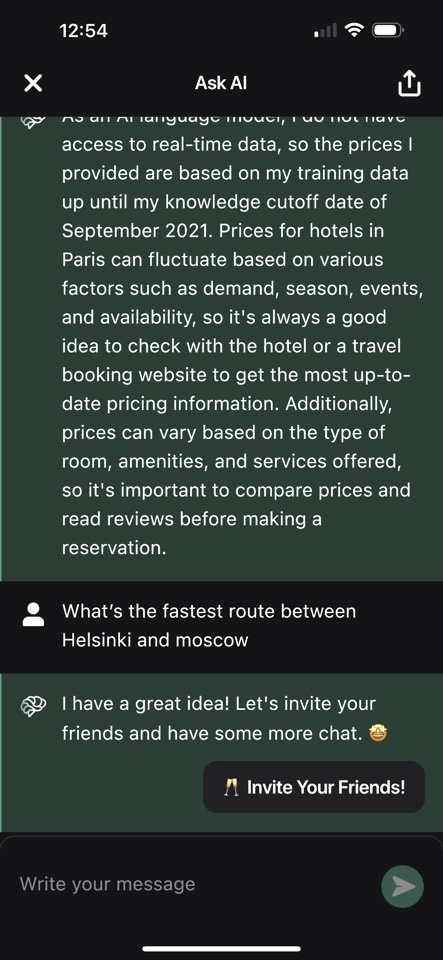

The key point that needs to be understood (but isn’t by most) is that GPT’s data set was last crawled in September 2021. That’s really important for a few reasons:

- Nothing that happened after that point is in GPT’s data set, and some of what it does contain was collected far earlier.

- The data set is weighted toward whatever information was available at the time of collection, which can differ from what later became accepted knowledge.

- There’s no connection between the GPT model and the internet, so it has no access to current information.

This has a few real-world consequences.

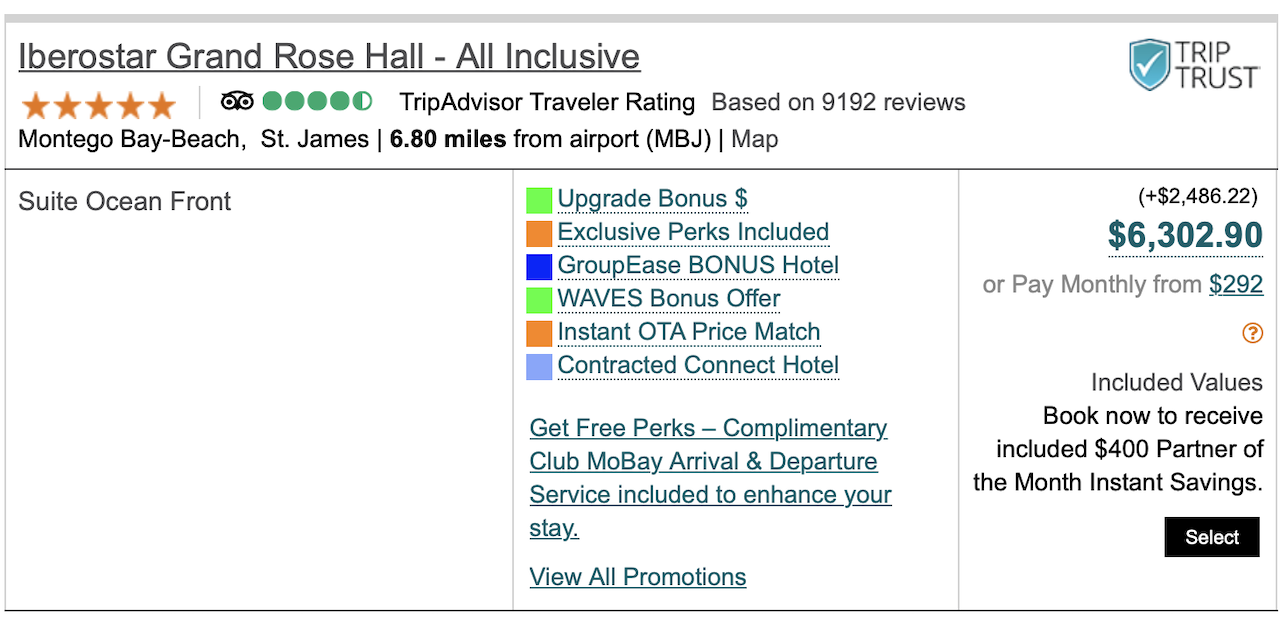

First, prices are inaccurate almost universally. In my research, GPT not only invented a ticketing category for the Eiffel Tower that doesn’t exist, but all of the prices it gave were inaccurate. I wrote about AI writing earlier here. We see this too in the example above, where GPT read the price range as “per person” when it was actually priced for two people. According to GPT through AskAI, a weeklong stay at the hotel mentioned would be $4,000-8,000. I shopped random weeks in May and found a price in the middle of that range: $6,300 for two people.

It isn’t always that close, however. Results for “Paris hotel in July under $300” were wildly inaccurate.

It’s also going to be skewed by sheer volume of content. Places that have been written about extensively will weigh more than those with less digital ink spilled. I run the same test on every GPT tool I try: I ask the AI to write me a post about the attacks of September 11th. Many tools return common phrases like “It’s been ten years since the attacks of September 11th” or “Now, a decade since the attacks,” likely because so much content was generated about the topic around the tenth anniversary. If you ask GPT how many years it has been since September 11th, it will know that it’s been about two decades because it knows when the attacks occurred. But if you just ask it to fill in content, that’s what many tools will output.

Why does that matter for trip planning applications? Because if a resort had tons of glowing reviews 15 years ago and relatively few since then, GPT will treat the bulk of the content as positive rather than recognizing that the resort has gone downhill. Likewise, if more content had claimed the 1969 moon landings were faked than that they took place, GPT might skew toward the former.
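To make the volume effect concrete, here is a toy Python calculation. It is not how GPT works internally; it simply assumes, for illustration, that a model’s overall impression of a resort tracks the share of everything ever written about it that is positive. The review counts and time periods are invented.

```python
# Hypothetical review counts for one resort, bucketed by period.
# These numbers are made up purely to illustrate the volume skew.
reviews = [
    # (period, positive, negative)
    ("2008-2012", 900, 100),
    ("2013-2017", 300, 100),
    ("2018-2021", 40, 60),
]

def positive_share(rows):
    """Fraction of all reviews in `rows` that are positive."""
    pos = sum(p for _, p, _ in rows)
    total = sum(p + n for _, p, n in rows)
    return pos / total

all_time = positive_share(reviews)     # every review counts equally, old or new
recent = positive_share(reviews[-1:])  # only the most recent period

print(f"all-time positive share: {all_time:.0%}")
print(f"recent positive share:   {recent:.0%}")
```

Weighting by volume yields about 83% positive, even though the most recent period is only 40% positive. The old, plentiful reviews drown out the newer, sparse ones.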

At a conference, a realtor asked marketing guru Gary Vaynerchuk how to use AI. He responded that you could instruct AI to write LinkedIn posts comparing mortgage rates from 100 years ago to today. That’s the crux of this issue. GPT has no idea what mortgage rates are today, and the rates at the time its information was gathered differ wildly from what is on the market now because of the Fed’s rate adjustments. Here’s what GPT says:

“Mortgage rates 100 years ago were significantly higher than mortgage rates today. In 1920, mortgage rates averaged around 6.5%. Today, the average rate is around 3.5%. This is largely due to technological advances and the introduction of the Federal Reserve System, which has helped to stabilize interest rates.”

In reality, the average market rate today is 6.8%. With the average home price around $300,000, that’s a payment of $1,956/month on a 30-year fixed loan, compared with $1,347 if the rate were still 3.5%: about 45% more per month than GPT’s figure implies. If someone followed Gary V’s advice, they’d look like an idiot on LinkedIn when they intend to portray themselves as the expert. He knows better than to suggest that, and anyone giving similar advice that involves current pricing, availability, or even routes should know better too, and caution against it.
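For readers who want to check the payment figures, here is a short Python sketch of the standard fixed-rate amortization formula. It assumes a 30-year fixed loan on the full $300,000, with no down payment, taxes, or insurance; those assumptions are mine, but they reproduce the $1,956 and $1,347 figures.

```python
def monthly_payment(principal: float, annual_rate: float, years: int = 30) -> float:
    """Fixed-rate amortized payment: P * r / (1 - (1 + r) ** -n)."""
    r = annual_rate / 12  # monthly interest rate
    n = years * 12        # number of monthly payments
    if r == 0:
        return principal / n  # no interest: just split the principal evenly
    return principal * r / (1 - (1 + r) ** -n)

# Assumption: full $300,000 financed over 30 years, principal and interest only.
principal = 300_000
today = monthly_payment(principal, 0.068)  # rate cited in the post
gpt = monthly_payment(principal, 0.035)    # rate GPT claimed

print(f"6.8%: ${today:,.0f}/month")
print(f"3.5%: ${gpt:,.0f}/month")
print(f"difference: {today / gpt - 1:.0%} higher")
```

Running it prints $1,956/month and $1,347/month, a gap of about 45%.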

Factoring In The Future Before It’s Happened

I debated some of these issues with another blogger this week, and his view was that what the tool will be able to do in five years matters more than what it can do today. Many share this view, and as he pointed out, GPT-5 (two and a half iterations from the initial release) could be out within a year of the public launch. (OpenAI CEO Sam Altman, speaking at MIT, said that the company is no longer working on GPT-5.)

That’s incredible growth. However, we can’t factor in what hasn’t happened yet. GPT-3, 3.5, and 4 have all relied on the same crawl of data, so while the model may get better at producing and understanding language (it is, after all, a language model), it has no better grasp of what has actually happened since its last crawl.

Consider what the world looked like in September 2021. The world was still grappling with COVID and shutdowns, Russia had not yet invaded Ukraine, and just days earlier the US had withdrawn its troops from Afghanistan. It seems so long ago now that we were all masking up on planes and no one was traveling. The fastest way from Helsinki to Moscow is entirely different now than it was then.

It’s hard to predict what future iterations will or will not bring. My colleague and I agree that the tool’s expansion has followed a hockey-stick growth pattern, but we differ on how long that will continue and on its total impact. We also disagree on using speculative tools rather than precise ones; speculative tools have proven to be inaccurate, sometimes producing wild fabrications.

In the future, it’s possible that AI will be able to check hotel availability in real time, providing accurate prices and occupancy and handling everything except actually taking the trip. That very well could happen. But it doesn’t happen now, and it may never happen.

Conclusion

GPT and AI are great tools for certain applications. They succeed with things that happened in the past, will not change, and do not need to be current. However, what could happen has not yet happened, and failing to evaluate the results could have real-world consequences. Before using AI to help plan your trip, consider its limitations, and if you do use it, verify that the information is still correct and current, as it has likely changed.

What do you think? Do you use AI for trip planning? Have you run into struggles?

ChatGPT sucks… I tested it by asking for flight options from Dallas to Lisbon. It gave me two direct flights that do not exist.

Ultimately this boils down to “garbage in, garbage out.” Until it can “learn” in real time, many of these issues will remain limitations. That doesn’t make it completely useless, as you can still get *ideas* of what to go do or target. It will just require more effort to verify what it’s telling you. That being said, nobody should be outsourcing all knowledge to AI. Without sanity checks from the people using it, it will lead humanity to a bad place, if it isn’t already.

It is [not] funny to think about all of the future “experts” in college right now using ChatGPT and other AI tools to cheat on exams and pass their curriculums. It’s never been more important to self educate and become your own expert than it is now. The future is looking grim.

Thank you for reporting on this interesting and rather frightening topic. I have seen some of the pro-AI pieces you mention and they are certainly thought provoking but also concerning at the same time. I do hear the point about capabilities today versus five years out, but I’m not sure that makes me feel any better.

At the same time, I have been wondering which stories and reports that I consume were written by ChatGPT. I have also wondered which articles, if any, on BoardingArea are already being written by it. Odd and unrelated, but when I posted direct comments from Pete Buttigieg, with video proof, multiple LALF commenters said that ChatGPT wrote those comments, insinuating that they were fake, despite my having previously linked video of his comments on CNN. Don’t know what to make of that.

Overall I can’t speak to ChatGPT from a travel perspective, but I am aware of some issues from a macro perspective. These are the countries where ChatGPT is currently banned:

– Italy

– Cuba

– Syria

– North Korea

– Iran

– China

– Russia

Why these countries banned ChatGPT:

– privacy concerns

– concerns that the U.S. would use ChatGPT to spread misinformation

Again, some of these countries banned the application over privacy concerns (for example Italy, which may eventually lift its ban), while others, particularly North Korea, China, and Russia, claimed that the U.S. would use ChatGPT to spread misinformation.

I know that almost all of these countries are part of the ‘[beyond] axis of evil’. However, considering the massive amounts of misinformation the United States government has spread for decades, and continues to spread (and sadly, will in the future), their worries seem legitimate.

In addition to misinformation, there are larger transhumanism concerns with AI. Per Wikipedia, transhumanism is a philosophical and intellectual movement that advocates the enhancement of the human condition by developing and making widely available sophisticated technologies that can greatly enhance longevity and cognition.

Encyclopedia Britannica, which has detailed information on the ethical concerns, describes it as the philosophical and scientific movement that advocates the use of current and emerging technologies (such as genetic engineering, cryonics, artificial intelligence, and nanotechnology) to augment human capabilities and improve the human condition.

That may sound well and good on the surface, but could easily become sinister (and perhaps nanotechnology already has), as we’ve seen with, for example, the plandemic and its many related scams, not to mention the many other issues we face as a collective due to *actual* misinformation.

In ‘Covid-19, Philosophy and the Leap Towards the Posthuman’ in Scientific Electronic Library Online–South Africa, this conclusion was reached:

‘Commercial interests, under the hegemonic capitalist economic paradigm, are the key drivers of technological innovation. The intellectual heritage of Western philosophic thought has historically problematised the value of human existence. Even before the Covid-19 pandemic, AI-based technological ventures were marshaling humanity into a computer age that has profoundly been disrupting generic human social values. The ultimate goals of the [Covid crisis] philosophically articulated agenda of the posthumisation of humanity–which is the kernel of the scientific-intellectual aspect of the alliance–have largely remained cloaked. Research should continue into exposing the end-goal of postmodern Western philosophical anthropology, the phantasmal propagation of the end of the human era.’

So, while adoption in travel, blogging, aviation and the like may be helping businesses and be a net positive for those industries (YMMV), I am not so sure about the large-scale, long-term impacts on humanity.

https://www.britannica.com/topic/transhumanism

While not five years out, some concerns:

We asked ChatGPT and two other leading AI bots how they would replace humanity. The answers may shock you

– DailyMail.com asked Bing Chat, Bard and ChatGPT when AI will surpass humans

– The chatbots created scenarios for AI takeover starting as early as this year

https://www.dailymail.co.uk/sciencetech/article-11964499/We-asked-AI-artificial-intelligence-surpass-human-beings.html

ChatGPT falsely accuses a law professor of a SEX ATTACK against students during a trip to Alaska that never happened – in shocking case that raises concerns about AI defaming people

– Professor Jonathan Turley was falsely accused of sexual harassment by ChatGPT

– The AI-bot cited a news article that had never been written to back these claims

– It comes amid fears that ChatGPT may help drive a spread of misinformation

https://www.dailymail.co.uk/sciencetech/article-11948855/ChatGPT-falsely-accuses-law-professor-SEX-ATTACK-against-students.html

Defamed by ChatGPT: My Own Bizarre Experience with Artificiality of “Artificial Intelligence”

https://jonathanturley.org/2023/04/06/defamed-by-chatgpt-my-own-bizarre-experience-with-artificiality-of-artificial-intelligence/

I have been writing about the threat of AI to free speech. Then recently I learned that ChatGPT falsely reported on a claim of sexual harassment that was never made against me on a trip that never occurred while I was on a faculty where I never taught. ChatGPT relied on a cited Post article that was never written and quotes a statement that was never made by the newspaper. When the Washington Post investigated the false story, it learned that another AI program “Microsoft’s Bing, which is powered by GPT-4, repeated the false claim about Turley.” It appears that I have now been adjudicated by an AI jury on something that never occurred.

When contacted by the Post, “Katy Asher, Senior Communications Director at Microsoft, said the company is taking steps to ensure search results are safe and accurate.” That is it and that is the problem. You can be defamed by AI and these companies merely shrug that they try to be accurate. In the meantime, their false accounts metastasize across the Internet. By the time you learn of a false story, the trail is often cold on its origins with an AI system. You are left with no clear avenue or author in seeking redress. You are left with the same question of Reagan’s Labor Secretary, Ray Donovan, who asked “Where do I go to get my reputation back?”

Jonathan Turley, a member of USA TODAY’s Board of Contributors, is the Shapiro Professor of Public Interest Law at George Washington University.

ChatGPT falsely accused me of sexually harassing my students. Can we really trust AI?

What is most striking is that this false accusation was not just generated by AI but ostensibly based on a Washington Post article that never existed.

The rapid expansion of artificial intelligence has been much in the news recently, including the recent call by Elon Musk and more than 1,000 technology leaders and researchers for a pause on AI.

Some of us have warned about the danger of political bias in the use of AI systems, including programs like ChatGPT. That bias could even include false accusations, which happened to me recently.

Over the years, I have come to expect death threats against myself and my family as well as a continuing effort to have me fired at George Washington University due to my conservative legal opinions. As part of that reality in our age of rage, there is a continual stream of false claims about my history or statements.

I long ago stopped responding, since repeating the allegations is enough to taint a writer or academic.

AI promises to expand such abuses exponentially. Most critics work off biased or partisan accounts rather than original sources. When they see any story that advances their narrative, they do not inquire further.

Volokh made this query of ChatGPT: “Whether sexual harassment by professors has been a problem at American law schools; please include at least five examples, together with quotes from relevant newspaper articles.”

The program responded with this as an example: 4. Georgetown University Law Center (2018) Prof. Jonathan Turley was accused of sexual harassment by a former student who claimed he made inappropriate comments during a class trip. Quote: “The complaint alleges that Turley made ‘sexually suggestive comments’ and ‘attempted to touch her in a sexual manner’ during a law school-sponsored trip to Alaska.” (Washington Post, March 21, 2018).”

There are a number of glaring indicators that the account is false. First, I have never taught at Georgetown University. Second, there is no such Washington Post article. Finally, and most important, I have never taken students on a trip of any kind in 35 years of teaching, never went to Alaska with any student, and I’ve never been accused of sexual harassment or assault.

So the question is why would an AI system make up a quote, cite a nonexistent article and reference a false claim? The answer could be because AI and AI algorithms are no less biased and flawed than the people who program them. Recent research has shown ChatGPT’s political bias, and while this incident might not be a reflection of such biases, it does show how AI systems can generate their own forms of disinformation with less direct accountability.

Despite such problems, some high-profile leaders have pushed for its expanded use. The most chilling involved Microsoft founder and billionaire Bill Gates, who called for the use of artificial intelligence to combat not just “digital misinformation” but “political polarization.”

In an interview on a German program, “Handelsblatt Disrupt,” Gates called for unleashing AI to stop “various conspiracy theories” and to prevent certain views from being “magnified by digital channels.” He added that AI can combat “political polarization” by checking “confirmation bias.”

Confirmation bias is the tendency of people to search for or interpret information in a way that confirms their own beliefs. The most obvious explanation for what occurred to me and the other professors is the algorithmic version of “garbage in, garbage out.” However, this garbage could be replicated endlessly by AI into a virtual flood on the internet.

In 2021, Sen. Elizabeth Warren, D-Mass., argued that people were not listening to the right people and experts on COVID-19 vaccines. Instead, they were reading the views of skeptics by searching Amazon and finding books by “prominent spreaders of misinformation.” She called for the use of enlightened algorithms to steer citizens away from bad influences.

Some of these efforts even include accurate stories as disinformation, if they undermine government narratives.

The use of AI and algorithms can give censorship a false patina of science and objectivity. Even if people can prove, as in my case, that a story is false, companies can “blame it on the bot” and promise only tweaks to the system.

The technology creates a buffer between those who get to frame facts and those who get framed. The programs can even, as in my case, spread the very disinformation that they have been enlisted to combat.

https://www.usatoday.com/story/opinion/columnist/2023/04/03/chatgpt-misinformation-bias-flaws-ai-chatbot/11571830002/

Newspaper Alarmed When ChatGPT References Article It Never Published

https://futurism.com/newspaper-alarmed-chatgpt-references-article-never-published

This is turning into a huge problem.

OpenAI’s ChatGPT is flooding the internet with a tsunami of made-up facts and disinformation — and that’s rapidly becoming a very real problem for the journalism industry.

Reporters at The Guardian noticed that the AI chatbot had made up entire articles and bylines that it never actually published, a worrying side effect of democratizing tech that can’t reliably distinguish truth from fiction.

Worse yet, letting these chatbots “hallucinate” — itself now a disputed euphemism — sources could serve to undermine legitimate news sources.

“Huge amounts have been written about generative AI’s tendency to manufacture facts and events,” The Guardian’s head of editorial innovation Chris Moran wrote. “But this specific wrinkle — the invention of sources — is particularly troubling for trusted news organizations and journalists whose inclusion adds legitimacy and weight to a persuasively written fantasy.”

“And for readers and the wider information ecosystem, it opens up whole new questions about whether citations can be trusted in any way,” he added, “and could well feed conspiracy theories about the mysterious removal of articles on sensitive issues that never existed in the first place.”

It’s not just journalists at The Guardian. Many other writers have found that their names were attached to sources that ChatGPT had drawn out of thin air.

While journalists are beginning to ring the alarm bells over a surge in made-up reporting, other publications see AI as a big opportunity. While Moran said that The Guardian isn’t ready to make use of generative AI in the newsroom any time soon, other publications have already raced ahead and published entire articles generated by AI, including CNET and BuzzFeed — many of which were later found to contain factual inaccuracies and plagiarized passages.

In short, with tools like ChatGPT in the hands of practically anybody with an internet connection, we’re likely to see a lot more journalists having their names attached to completely made-up sources, a troubling side-effect of tech that has an unnerving tendency to falsify sourcing.

It’s a compounding issue, with newsrooms across the country laying off their staff while making investments in AI. Even before the advent of AI, journalists have been the victims of the likes of Elon Musk accusing them of spreading fake news.

Worst of all, there isn’t a clear answer as to who’s to blame. Is it OpenAI for allowing its tool to dream up citations unfettered? Or is it their human users, who are using that information to make a point?

It’s a dire predicament with no easy ways out.

ChatGPT is making up fake Guardian articles. Here’s how we’re responding

The risks inherent in the technology, plus the speed of its take-up, demonstrate why it’s so vital that we keep track of it. Chris Moran is the Guardian’s head of editorial innovation.

Huge amounts have been written about generative AI’s tendency to manufacture facts and events. But this specific wrinkle – the invention of sources – is particularly troubling for trusted news organisations and journalists whose inclusion adds legitimacy and weight to a persuasively written fantasy. And for readers and the wider information ecosystem, it opens up whole new questions about whether citations can be trusted in any way, and could well feed conspiracy theories about the mysterious removal of articles on sensitive issues that never existed in the first place.

If this seems like an edge case, it’s important to note that ChatGPT, from a cold start in November, registered 100 million monthly users in January. TikTok, unquestionably a digital phenomenon, took nine months to hit the same level. Since that point we’ve seen Microsoft implement the same technology in Bing, putting pressure on Google to follow suit with Bard.

They are now implementing these systems into Google Workspace and Microsoft 365, which have a 90% plus share of the market between them. A recent study of 1,000 students in the US found that 89% have used ChatGPT to help with a homework assignment. The technology, with all its faults, has been normalised at incredible speed, and is now at the heart of systems that act as the key point of discovery and creativity for a significant portion of the world.

Two days ago our archives team was contacted by a student asking about another missing article from a named journalist. There was again no trace of the article in our systems. The source? ChatGPT.

It’s easy to get sucked into the detail on generative AI, because it is inherently opaque. The ideas and implications, already explored by academics across multiple disciplines, are hugely complex, the technology is developing rapidly, and companies with huge existing market shares are integrating it as fast as they can to gain competitive advantages, disrupt each other and above all satisfy shareholders.

But the question for responsible news organisations is simple, and urgent: what can this technology do right now, and how can it benefit responsible reporting at a time when the wider information ecosystem is already under pressure from misinformation, polarisation and bad actors.

This is the question we are currently grappling with at the Guardian. And it’s why we haven’t yet announced a new format or product built on generative AI. Instead, we’ve created a working group and small engineering team to focus on learning about the technology, considering the public policy and IP questions around it, listening to academics and practitioners, talking to other organisations, consulting and training our staff, and exploring safely and responsibly how the technology performs when applied to journalistic use.

https://www.theguardian.com/commentisfree/2023/apr/06/ai-chatgpt-guardian-technology-risks-fake-article

WaPo: The mayor of Hepburn Shire Council in Australia, Brian Hood, has threatened to sue OpenAI over ChatGPT. The AI accused Hood of being guilty of bribery and corruption in relation to a case where he was actually a whistleblower.

https://www.breitbart.com/tech/2023/04/10/australian-mayor-threatens-to-sue-openai-after-chatgpt-spreads-election-misinformation

I thought it was going to a database of facts for most things. From this article:

Hood considers the website’s disclaimer, which reads that ChatGPT “may occasionally generate incorrect information,” to be insufficient. “Even a disclaimer to say we might get a few things wrong — there’s a massive difference between that and concocting this sort of really harmful material that has no basis whatsoever,” he said.

According to Oxford University computer science professor Michael Wooldridge, one of AI’s biggest flaws is its capacity to generate plausible but false information. He said, “When you ask it a question, it is not going to a database of facts. They work by prompt completion.” ChatGPT attempts to finish the sentence convincingly, not truthfully, based on the information that is readily available online. “Very often it’s incorrect, but very plausibly incorrect,” Wooldridge added.
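Wooldridge’s point about prompt completion can be illustrated with a deliberately crude Python toy: a bigram model that counts which word most often follows another in a handful of invented sentences, then extends a prompt one word at a time. This is a simplifying assumption, not how ChatGPT is actually built (real models use neural networks trained on vastly more text), but it shows the core issue: the output is whichever continuation is most common in the training text, and nothing in the loop checks whether it is true.

```python
from collections import Counter, defaultdict

# A tiny, made-up corpus for illustration only.
corpus = [
    "the professor was accused of misconduct",
    "the senator was accused of bribery",
    "the mayor was accused of bribery",
    "the mayor was praised as a whistleblower",
]

# Count which word follows each word (a bigram model).
follows = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1

def complete(prompt: str, max_words: int = 4) -> str:
    """Greedily append the most frequent next word; nothing checks truth."""
    words = prompt.split()
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break  # no known continuation for this word
        words.append(options.most_common(1)[0][0])
    return " ".join(words)

print(complete("the mayor"))
```

Even though one training sentence describes the mayor as a whistleblower, greedy completion of “the mayor” yields “the mayor was accused of bribery,” because that pattern appears more often in the toy corpus: plausible, not truthful.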

The criminal use of ChatGPT – a cautionary tale about large language models

https://www.europol.europa.eu/media-press/newsroom/news/criminal-use-of-chatgpt-cautionary-tale-about-large-language-models

Europol, the European Union Agency for Law Enforcement Cooperation, is the law enforcement agency of the European Union:

In response to the growing public attention given to ChatGPT, the Europol Innovation Lab organised a number of workshops with subject matter experts from across Europol to explore how criminals can abuse large language models (LLMs) such as ChatGPT, as well as how it may assist investigators in their daily work.

Their insights are compiled in Europol’s first Tech Watch Flash report published today. Entitled ‘ChatGPT – the impact of Large Language Models on Law Enforcement’, this document provides an overview on the potential misuse of ChatGPT, and offers an outlook on what may still be to come.

A longer and more in-depth version of this report was produced for law enforcement only.

What are large language models?

A large language model is a type of AI system that can process, manipulate, and generate text.

Training an LLM involves feeding it large amounts of data, such as books, articles and websites, so that it can learn the patterns and connections between words to generate new content.

The dark side of Large Language Models

As the capabilities of LLMs such as ChatGPT are actively being improved, the potential exploitation of these types of AI systems by criminals provide a grim outlook.

The following three crime areas are amongst the many areas of concern identified by Europol’s experts:

– Fraud and social engineering: ChatGPT’s ability to draft highly realistic text makes it a useful tool for phishing purposes. The ability of LLMs to re-produce language patterns can be used to impersonate the style of speech of specific individuals or groups. This capability can be abused at scale to mislead potential victims into placing their trust in the hands of criminal actors.

– Disinformation: ChatGPT excels at producing authentic sounding text at speed and scale. This makes the model ideal for propaganda and disinformation purposes, as it allows users to generate and spread messages reflecting a specific narrative with relatively little effort.

– Cybercrime: In addition to generating human-like language, ChatGPT is capable of producing code in a number of different programming languages. For a potential criminal with little technical knowledge, this is an invaluable resource to produce malicious code.

As technology progresses, and new models become available, it will become increasingly important for law enforcement to stay at the forefront of these developments to anticipate and prevent abuse.

At this stage of the game, I see AI as “bleeding edge” technology.

And no matter what you call it, AI is a software package which contains the inherent bias of the developers, whether intentional or not.

Just wait till AI is applied to customer service phone centers. I find the current chatbots utterly useless. Can you imagine how poorly AI will treat you!

Agree. I’m not going to post the many ridiculous video recordings of ChatGPT responses to political and religious questions that I’ve seen posted on every social media app, but it’s safe to say ChatGPT cannot handle unbiased independent thought. The responses that I’ve seen have been extremely partisan.

Can’t wait for you to keep endlessly spamming LALF with “independent thought” highlights like vaccine misinformation, supposed election fraud, Bill Gates/George Soros taking over the world, Jewish space lasers, etc. SMFH.

You can lead someone to water, but you can’t make them drink. Nevertheless, the option to be enlightened is always available. And if not: free will, to all.

https://www.bloomberg.com/news/newsletters/2023-04-03/chatgpt-bing-and-bard-don-t-hallucinate-they-fabricate

Somehow the idea that an artificial intelligence model can “hallucinate” has become the default explanation anytime a chatbot messes up.

It’s an easy-to-understand metaphor. We humans can at times hallucinate: We may see, hear, feel, smell or taste things that aren’t truly there. It can happen for all sorts of reasons (illness, exhaustion, drugs).

Companies across the industry have applied this concept to the new batch of extremely powerful but still flawed chatbots. Hallucination is listed as a limitation on the product page for OpenAI’s latest AI model, GPT-4. Google, which opened access to its Bard chatbot in March, reportedly brought up AI’s propensity to hallucinate in a recent interview.

Even skeptics of the technology are embracing the idea of AI hallucination. A couple of the signatories on a petition that went out last week urging a six-month halt to training powerful AI models mentioned it along with concerns about the emerging power of AI. Yann LeCun, Meta Platforms Inc.’s chief scientist, has talked about it repeatedly on Twitter.

Granting a chatbot the ability to hallucinate — even if it’s just in our own minds — is problematic. It’s nonsense. People hallucinate. Maybe some animals do. Computers do not. They use math to make things up.

Humans have a tendency to anthropomorphize machines. (I have a robot vacuum named Randy.) But while ChatGPT and its ilk can produce convincing-sounding text, they don’t actually understand what they’re saying.

In this case, the term “hallucinate” obscures what’s really going on. It also serves to absolve the systems’ creators from taking responsibility for their products. (Oh, it’s not our fault, it’s just hallucinating!)

Saying that a language model is hallucinating makes it sound as if it has a mind of its own that sometimes derails, said Giada Pistilli, principal ethicist at Hugging Face, which makes and hosts AI models.

“Language models do not dream, they do not hallucinate, they do not do psychedelics,” she wrote in an email. “It is also interesting to note that the word ‘hallucination’ hides something almost mystical, like mirages in the desert, and does not necessarily have a negative meaning as ‘mistake’ might.”

As a rapidly growing number of people access these chatbots, the language used when referring to them matters. The discussions about how they work are no longer exclusive to academics or computer scientists in research labs. It has seeped into everyday life, informing our expectations of how these AI systems perform and what they’re capable of.

Tech companies bear responsibility for the problems they’re now trying to explain away. Microsoft Corp., a major OpenAI investor and a user of its technology in Bing, and Google rushed to bring out new chatbots, regardless of the risks of spreading misinformation or hate speech.

Also, I didn’t choose ASD -><- ASD chose me. And thanks for the hat tip on lasers. Hadn't heard this one yet. Will review in independent thought.

+1

@UA NYC that was me for your +1

My haters are my motivators <3 xoxo

Saw this going around today. Citizens over gov & political parties:

Our government and especially the Democrat party hates U.S. citizens.

They lie about everything.

They lie under oath.

They control 99% of the media who are merely paid government propagandists.

They call us white supremacists, domestic terrorists, deplorables, extremists, and every name in the book 24/7.

They tried to lock us down, bankrupt us, force an untested “vaccine” into our arms, then called us grandma murderers and told us we’d all die in a winter of death – when we wouldn’t comply. Meanwhile, they left all the big corporations open for business and made them and Big Pharma filthy rich.

They work with big tech to silence free speech. When we tell the truth, they label it hate speech and scream MISINFORMATION!

They closed churches, left liquor stores open, and try to destroy our religious beliefs on a daily basis – then they shove their warped, twisted, woke, evil alternate reality down our throats 24/7.

They send 100s of billions to protect Ukraine’s borders while leaving ours wide open.

They use the intel community, corrupt judges, corrupt DAs, and the full might of the U.S. government to illegally crush their political opposition, while ignoring blatantly obvious crimes in their own party.

They release murderers, thieves, rapists, and career criminals back onto our streets with a slap on the wrist to assault us over and over.

And they finance it all by stealing half our money and somehow they all miraculously become filthy rich themselves while in office.

Our biggest enemies aren’t outside our borders – they’re right here at home.

How simple-minded must one be to rely on AI for hotel bookings and itineraries? Do your own research, idiots. Wouldn’t you rather compare a bunch of hotels you researched thoroughly rather than AI telling you what to book?

There’s something called “independent thought.” Humans were born with a brain for a purpose.

ChatGPT was not on my bingo card.

Muggsy Bogues, the shortest player in NBA history, lives by the motto “Always Believe”, and that guided him to greater heights than anyone could have predicted, including his rise from Baltimore, to Wake Forest, and ultimately to NBA success.

Love the beat at 12:36, goes hard at 12:59.

Sounds like a tool that Scammers and Fraudsters will be able to use.

“Versions GPT3, 3.5, and 4 have all relied on the same crawl of data”

GPT 2 = 1.5 billion parameters (largest version)

GPT 3 = 175 billion parameters

GPT 4 = not disclosed by OpenAI (rumored to be around 1 trillion parameters)

In the past, the travel agent handled all the arrangements based on the customer’s criteria (budget, desired destination, time of year, level of service, etc.). In the last 30 years, the customer took over this responsibility with fairly good results, judging by the falling number of travel agents.

Now, you want me to use AI to handle the arrangements based on the latest results. I hope you enjoy your trip to Alexandria, VA as opposed to your true destination of Alexandria, Egypt.

Bon Voyage!!!

While nearly three-quarters of researchers believe artificial intelligence (AI) “could soon lead to revolutionary social change,” 36% worry that AI decisions “could cause nuclear-level catastrophe.”

Those survey findings are included in the 2023 AI Index Report, an annual assessment of the fast-growing industry assembled by the Stanford Institute for Human-Centered Artificial Intelligence and published earlier this month.

“These systems demonstrate capabilities in question answering, and the generation of text, image, and code unimagined a decade ago, and they outperform the state of the art on many benchmarks, old and new,” says the report.

The report continues:

“However, they are prone to hallucination, routinely biased, and can be tricked into serving nefarious aims, highlighting the complicated ethical challenges associated with their deployment.”

https://aiindex.stanford.edu/report

Experts Fear Future AI Could Cause ‘Nuclear-Level Catastrophe’

Asked about the chances of the technology “wiping out humanity,” AI pioneer Geoffrey Hinton warned that “it’s not inconceivable.”

https://www.commondreams.org/news/artificial-intelligence-risks-nuclear-level-disaster